138. Copy List with Random Pointer

Problem

Tags: Hash Table, Linked List

A linked list of length n is given such that each node contains an additional random pointer, which could point to any node in the list, or null.

Construct a deep copy of the list. The deep copy should consist of exactly n brand new nodes, where each new node has its value set to the value of its corresponding original node. Both the next and random pointer of the new nodes should point to new nodes in the copied list such that the pointers in the original list and copied list represent the same list state. None of the pointers in the new list should point to nodes in the original list.

For example, if there are two nodes X and Y in the original list, where X.random --> Y, then for the corresponding two nodes x and y in the copied list, x.random --> y.
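To make the requirement concrete, here is a minimal sketch of a checker for the conditions above. It tests that the values match, that each random pointer sits at the same position, and that no node of the copy belongs to the original list. `is_deep_copy` and `index_of` are hypothetical helpers, not part of the problem's API, and `struct Node` is repeated so the sketch compiles on its own. Positions are found by linear scans, so the check is O(n^2), which is acceptable for lists of at most 1000 nodes.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

// Same layout as the problem's Node (repeated so this sketch is self-contained).
struct Node {
    int val;
    struct Node *next;
    struct Node *random;
};

// Hypothetical helper: 0-based position of target in the list starting at head,
// or -1 if target is NULL or not in that list.
static int index_of(struct Node* head, struct Node* target) {
    int i = 0;
    for (struct Node* p = head; p != NULL; p = p->next, i++) {
        if (p == target) return i;
    }
    return -1;
}

// Hypothetical checker: true if copy mirrors orig (values and random indices)
// and none of copy's nodes, or random targets, are nodes of orig.
bool is_deep_copy(struct Node* orig, struct Node* copy) {
    struct Node* a = orig;
    struct Node* b = copy;
    while (a != NULL && b != NULL) {
        if (a->val != b->val) return false;
        // Random pointers must point to the same position in their own list.
        if (index_of(orig, a->random) != index_of(copy, b->random)) return false;
        // The copy must not reuse or point into the original nodes.
        if (index_of(orig, b) != -1) return false;
        if (b->random != NULL && index_of(orig, b->random) != -1) return false;
        a = a->next;
        b = b->next;
    }
    // Both lists must have the same length.
    return a == NULL && b == NULL;
}
```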

Return the head of the copied linked list.

The linked list is represented in the input/output as a list of n nodes. Each node is represented as a pair of [val, random_index] where:

- val: an integer representing Node.val
- random_index: the index of the node (range from 0 to n-1) that the random pointer points to, or null if it does not point to any node.
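A minimal sketch of how this [val, random_index] format maps onto nodes, assuming the pairs are split into two parallel arrays and -1 stands in for null. `build_list` and `random_index_of` are hypothetical names chosen for illustration. All nodes are allocated first, so that any random_index can be resolved to a node in the second pass.

```c
#include <assert.h>
#include <stdlib.h>

struct Node {
    int val;
    struct Node *next;
    struct Node *random;
};

// Hypothetical helper: builds a list of n nodes, where random_index[i] is the
// index of node i's random target, or -1 for null. Returns NULL when n == 0.
struct Node* build_list(const int vals[], const int random_index[], int n) {
    if (n == 0) return NULL;
    struct Node** nodes = malloc(n * sizeof *nodes);
    assert(nodes != NULL);
    // First pass: allocate every node so any index can be resolved later.
    for (int i = 0; i < n; i++) {
        nodes[i] = malloc(sizeof **nodes);
        assert(nodes[i] != NULL);
        nodes[i]->val = vals[i];
    }
    // Second pass: wire next in order and random by index.
    for (int i = 0; i < n; i++) {
        nodes[i]->next = (i + 1 < n) ? nodes[i + 1] : NULL;
        assert(random_index[i] >= -1 && random_index[i] < n);
        nodes[i]->random = (random_index[i] >= 0) ? nodes[random_index[i]] : NULL;
    }
    struct Node* head = nodes[0];
    free(nodes);  // only the index array; the nodes themselves stay alive
    return head;
}

// Inverse direction: index of node's random target within the list, or -1 for null.
int random_index_of(struct Node* head, struct Node* node) {
    int i = 0;
    for (struct Node* p = head; p != NULL; p = p->next, i++) {
        if (p == node->random) return i;
    }
    return -1;
}
```

For Example 1, the arrays would be {7, 13, 11, 10, 1} and {-1, 0, 4, 2, 0}. The sketch never frees the nodes it builds.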

Your code will only be given the head of the original linked list.

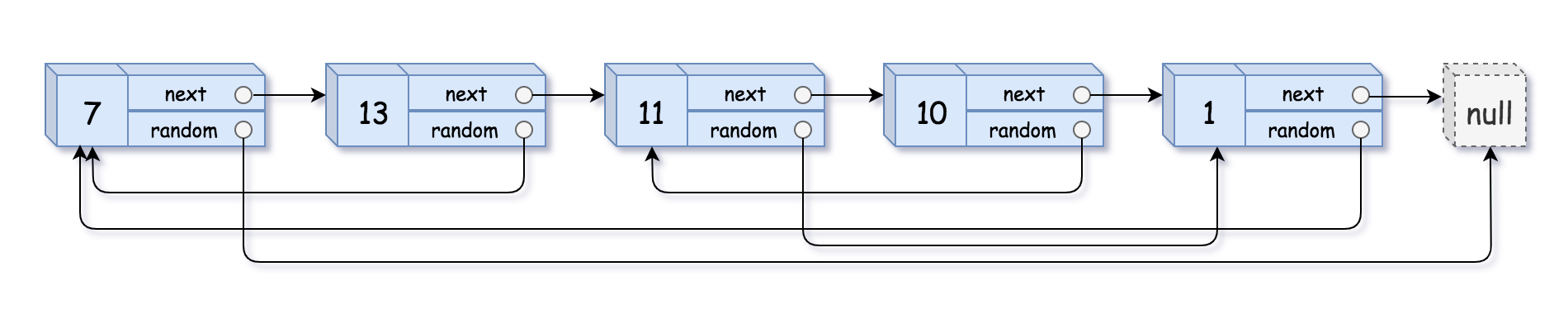

Example 1:

Input: head = [[7,null],[13,0],[11,4],[10,2],[1,0]]

Output: [[7,null],[13,0],[11,4],[10,2],[1,0]]

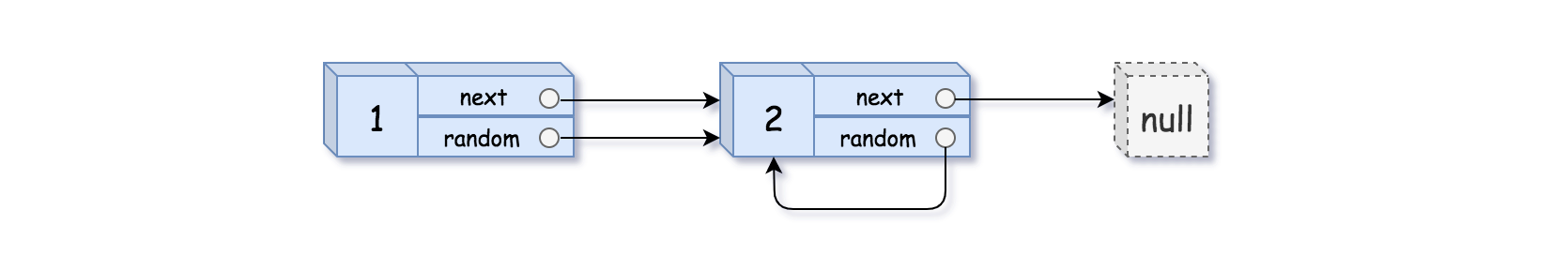

Example 2:

Input: head = [[1,1],[2,1]]

Output: [[1,1],[2,1]]

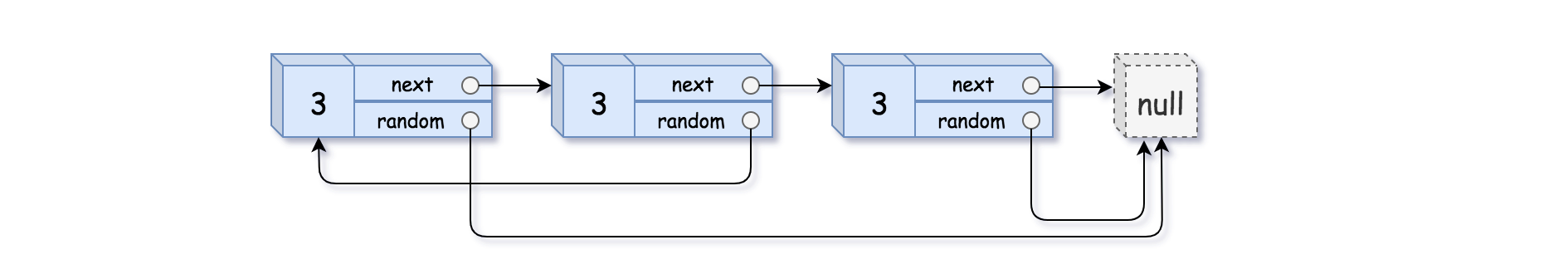

Example 3:

Input: head = [[3,null],[3,0],[3,null]]

Output: [[3,null],[3,0],[3,null]]

Constraints:

- 0 <= n <= 1000
- -10^4 <= Node.val <= 10^4
- Node.random is null or is pointing to some node in the linked list.

Solution

I use a hash table to record which nodes have already been copied, so that every original node maps to exactly one new node.

Initially the table had 100003 slots, 100003 being the smallest prime greater than 100000. I expected that to be large enough, and the accepted submission confirmed it.

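Many solutions use the interweaving technique instead: insert each copy right after its original node, set the copies' random pointers, then split the two lists apart. In my opinion that is not a good method, because it modifies the original list while copying (even though the last step restores it), and a deep copy should not have side effects on its source.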
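For comparison, a minimal sketch of the interweaving technique described above. It needs no table, only the new nodes themselves, but passes 1 and 2 run with the original list's next pointers rewritten, which is exactly the side effect objected to here; pass 3 puts them back. `copy_interweave` is a hypothetical name for illustration.

```c
#include <assert.h>
#include <stdlib.h>

struct Node {
    int val;
    struct Node *next;
    struct Node *random;
};

// Interweaving copy: A -> A' -> B -> B' -> ... , then split.
struct Node* copy_interweave(struct Node* head) {
    if (head == NULL) return NULL;
    // Pass 1: insert each copy right after its original (mutates the input list).
    for (struct Node* p = head; p != NULL; p = p->next->next) {
        struct Node* copy = malloc(sizeof *copy);
        assert(copy != NULL);
        copy->val = p->val;
        copy->random = NULL;
        copy->next = p->next;
        p->next = copy;
    }
    // Pass 2: the copy of p->random is the node right after p->random.
    for (struct Node* p = head; p != NULL; p = p->next->next) {
        if (p->random != NULL) {
            p->next->random = p->random->next;
        }
    }
    // Pass 3: split the two lists, restoring the original next pointers.
    struct Node* copy_head = head->next;
    for (struct Node* p = head; p != NULL; p = p->next) {
        struct Node* copy = p->next;
        p->next = copy->next;
        copy->next = (copy->next != NULL) ? copy->next->next : NULL;
    }
    return copy_head;
}
```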

After further investigation, I found that a 1111-slot hash table is large enough for this problem.
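One plausible reason a size that is not even prime (1111 = 11 × 101) suffices: if node addresses are evenly spaced, a table size that shares no factor with the spacing sends up to that many consecutive nodes to distinct slots, while a power-of-two size keeps reusing a few slots. The sketch below models this with simulated addresses. The fixed 16-byte stride and the name `distinct_slots` are assumptions for illustration; real allocators give no such guarantee, so collisions remain possible in general.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <stdlib.h>

// Counts how many distinct slots (address % table_size) are hit by n simulated
// addresses base, base + stride, base + 2 * stride, ...
size_t distinct_slots(uintptr_t base, uintptr_t stride, size_t n, size_t table_size) {
    bool* used = calloc(table_size, sizeof *used);
    assert(used != NULL);
    size_t distinct = 0;
    for (size_t k = 0; k < n; k++) {
        size_t slot = (size_t)((base + k * stride) % table_size);
        if (!used[slot]) {
            used[slot] = true;
            distinct++;
        }
    }
    free(used);
    return distinct;
}
```

With 1000 nodes 16 bytes apart, `distinct_slots(0x10000, 16, 1000, 1024)` hits only 64 slots, while a size of 1111 or 100003 gives all 1000 nodes their own slot.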

Code

C

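```c
// 138. Copy List with Random Pointer (1/28/54164)
// Runtime: 8 ms (82.95%) Memory: 8.40 MB (55.68%)

/**
 * Definition for a Node.
 * struct Node {
 *     int val;
 *     struct Node *next;
 *     struct Node *random;
 * };
 */

#include <stdint.h>
#include <stdlib.h>

// Must exceed the maximum list length (n <= 1000) so a free slot always exists.
#define HASH_SIZE 1111

// Open-addressing table keyed by node address:
// keys[i] is an original node and copies[i] is its clone.
struct Table {
    struct Node* keys[HASH_SIZE];
    struct Node* copies[HASH_SIZE];
};

struct Node* clone (struct Node* source, struct Table* table) {
    if (source == NULL) {
        return NULL;
    }

    // Hash the address, then probe linearly past slots owned by other nodes.
    size_t id = (uintptr_t)source % HASH_SIZE;
    while (table->keys[id] != NULL && table->keys[id] != source) {
        id = (id + 1) % HASH_SIZE;
    }

    if (table->keys[id] == NULL) {
        struct Node* copy = (struct Node*)malloc(sizeof(struct Node));
        copy->val = source->val;
        // Record the copy before recursing, so cycles through random terminate.
        table->keys[id] = source;
        table->copies[id] = copy;
        copy->next = clone(source->next, table);
        copy->random = clone(source->random, table);
    }

    return table->copies[id];
}

struct Node* copyRandomList (struct Node* head) {
    struct Table table = { { NULL }, { NULL } };
    return clone(head, &table);
}
```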